I’ll presume you already have an SSL certificate installed on your IIS web server, so your web site is already running at https://www.domain.com. This post is about how to enable SSL for FTP connections to that server.

Set the SSL cert for your FTP server and site

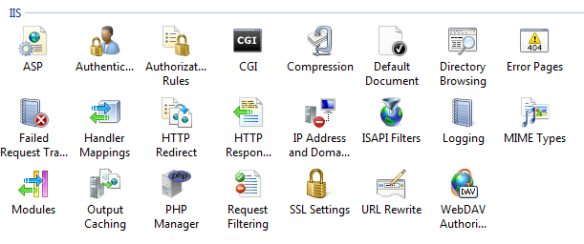

- Go to IIS Manager

- Click the server name at the top of the left tree and open the FTP SSL Settings icon

- In the SSL Certificate drop down select your certificate

- Select the Require SSL connections option

- Expand the list of Sites in the left tree and select your FTP site

- Open the FTP SSL Settings icon

- In the SSL Certificate drop down select your certificate

- Select the Require SSL connections option

Yes, I know we’ve done this in two places, once at server level and once at site level, but it does appear to be necessary.
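One way to confirm that the FTP site is presenting the certificate you selected is to connect from another machine and look at what comes back after the TLS handshake. The following is a minimal sketch using Python's standard `ftplib`, not something IIS provides. The helper names `common_name` and `fetch_ftps_certificate` are made up for illustration, and it assumes the certificate is trusted by the client machine, since an untrusted certificate will make the handshake fail.

```python
import ssl
from ftplib import FTP_TLS


def common_name(cert):
    """Return the commonName from a dict shaped like ssl's getpeercert() result."""
    for rdn in cert.get("subject", ()):
        for key, value in rdn:
            if key == "commonName":
                return value
    return None


def fetch_ftps_certificate(host, port=21, timeout=10):
    """Connect, upgrade the control channel with AUTH TLS, and return the server cert."""
    # A verifying context is used on purpose: without verification,
    # getpeercert() returns an empty dict.
    ftps = FTP_TLS(context=ssl.create_default_context(), timeout=timeout)
    ftps.connect(host, port)
    try:
        ftps.auth()  # sends AUTH TLS and wraps the control socket
        return ftps.sock.getpeercert()
    finally:
        ftps.close()
```

Calling `common_name(fetch_ftps_certificate("www.domain.com"))` with your own domain in place of the placeholder should then return the name the certificate was issued to.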

Set the Bindings for the FTP Site

- Go to IIS Manager

- Expand the list of Sites in the left tree and select your FTP site

- Over on the right, click Bindings…

- Click the Add button and add a binding with Type = FTP, IP Address = All Unassigned, Port = 21 and Host Name = www.domain.com (or whatever your domain is)

The firewall steps that follow are needed because you have to specify the FTP data channel ports and then open them.

Configure Firewall Ports

- Go to IIS Manager

- Click the server name at the top of the left tree and open the FTP Firewall Support icon

- The Data Channel Port Range may be set already; if not, set it to ‘7000-7003’

- Set the External IP Address of Firewall to the public IP of your server

- Go to Windows Firewall

- Add a new Inbound Rule, named something like FTPS for IIS

- On the Protocols and Ports tab select Protocol Type = TCP, and Local Port = Specific Ports, with the range 7000-7003

- Leave Remote Port = All Ports
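If you would rather script the Windows Firewall rule than click through the wizard, the same rule can be sketched with the `netsh advfirewall` command. The Python wrapper below is only an illustration: `firewall_rule_command` and `add_firewall_rule` are hypothetical helper names, the rule name and port range are the ones used in this post, and it assumes the script runs on the server from an elevated (Administrator) prompt.

```python
import subprocess


def firewall_rule_command(name, port_range):
    """Build a netsh command that allows inbound TCP traffic on the given local ports."""
    return [
        "netsh", "advfirewall", "firewall", "add", "rule",
        f"name={name}",
        "dir=in",             # inbound rule
        "action=allow",
        "protocol=TCP",
        f"localport={port_range}",
        # remoteport is left out, so it stays at its default of any port
    ]


def add_firewall_rule(name="FTPS for IIS", port_range="7000-7003"):
    """Run the command; raises CalledProcessError if netsh reports a failure."""
    subprocess.run(firewall_rule_command(name, port_range), check=True)
```

Keeping the command builder separate from the function that runs it makes it easy to print the command and check it before touching the firewall.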

This allowed me to FTP into my server with FTPS selected in my FTP program. Don’t forget that because you have bound the FTP site to your domain as a host name, the user name in your FTP program needs to be the domain name and the user name separated by a pipe, for example www.domain.com|userName.
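To show the pipe-separated login in practice, here is a rough sketch of an FTPS client using Python's standard `ftplib`. The function names `virtual_host_user` and `list_ftps_directory` are hypothetical, and the host, user name and password are placeholders. It assumes passive mode (the `ftplib` default), which is what the data channel port range configured earlier is for, and a certificate the client trusts.

```python
import ssl
from ftplib import FTP_TLS


def virtual_host_user(host_name, user_name):
    """Join the bound host name and the account name with a pipe."""
    return f"{host_name}|{user_name}"


def list_ftps_directory(host_name, user_name, password, timeout=10):
    """Log in over explicit FTPS on port 21 and return the root directory listing."""
    ftps = FTP_TLS(context=ssl.create_default_context(), timeout=timeout)
    ftps.connect(host_name, 21)
    try:
        # login() issues AUTH TLS before sending the credentials
        ftps.login(virtual_host_user(host_name, user_name), password)
        ftps.prot_p()  # encrypt the data channel as well
        return ftps.nlst()
    finally:
        ftps.close()
```

For example, `list_ftps_directory("www.domain.com", "userName", "your-password")` would log in as `www.domain.com|userName`.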